Investigating Oklahoma's earthquake surge with R and ggplot2

Quick summary: This is a four-part walkthrough on how to collect, clean, analyze, and visualize earthquake data. While there is a lot of R code and charts, that’s just a result of me wanting to practice ggplot2. The main focus of this guide is to practice purposeful research and numerical reasoning as a data journalist.

Quick nav:

- The chapter summaries

- The full table of contents.

- The repo containing the notebooks, code, and data

- The repo in a zip file.

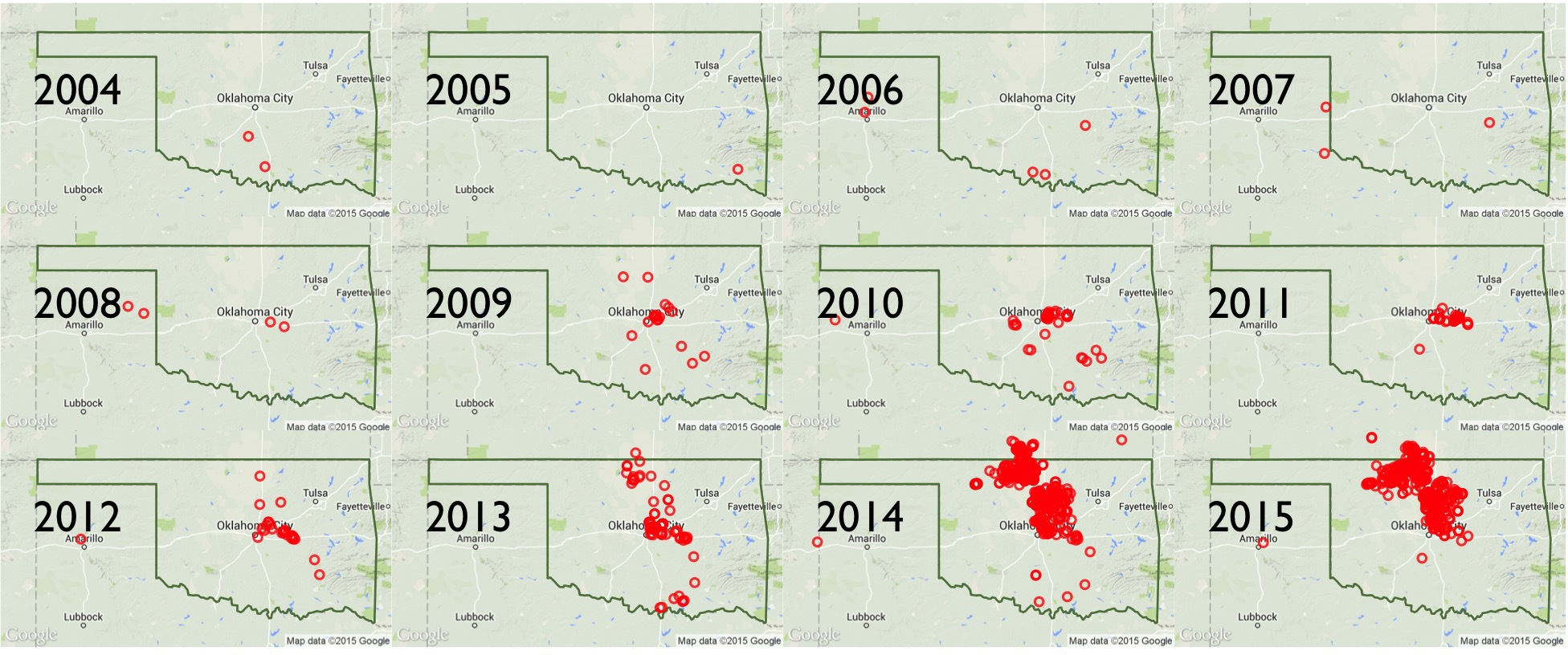

The main findings of this walkthrough summed up in an animated GIF composed of charts created with ggplot2:

- Meta stuff - A summary of what this walkthrough contains, the ideal intended audience, and technical details on how to configure your system and R setup similar to mine.

- Background into Oklahoma’s earthquakes - An overview of the scientific and political debates after Oklahoma’s earthquake activity reached a record high – in number and in magnitude – since 2010. And an overview of the main datasets we’ll use for our analysis.

- Basic R and ggplot2 concepts and examples - In this chapter, we’ll cover most of the basic R and ggplot2 conventions needed to do the necessary data-gathering/cleaning and visualizations in subsequent chapters.

- Exploring the historical earthquake data since 1995 - We repeat the same techniques in chapter 2, except instead of one month’s worth of earthquakes, we look at 20 years of earthquake data. The volume and scope of data requires different approaches in visualization and analysis.

- The Data Cleaning - By far the most soul-suckingly tedious and frustrating yet most necessary chapter in every data journalism project.

Key charts

Full table of contents

- Key charts

- Chapter 0: Meta stuff

- Chapter 1: Reading up on Oklahoma’s Earthquakes

- Chapter 2: Basic R and Data Visualization Concepts

- Chapter 3: Exploring Earthquake Data from 1995 to 2015

- Chapter 4: The Data Cleaning

Chapter 0: Meta stuff

Long summary of this walkthrough

This extremely verbose writeup consists of a series of R notebooks and aggregation of journalism and research into the recent “swarm” of earthquake activity in Oklahoma. It is now (and by “now”, I mean very recently) generally accepted by academia, industry, and the politicians, that the earthquakes are the result of drilling operations. So this walkthrough won’t reveal anything new to those who have been following the news. But I created the walkthrough to provide a layperson’s guide of how to go about researching the issue and also, how to create reproducible data process, including how to collect, clean, analyze, and visualize the earthquake data.

These techniques and principles are general enough to apply to any investigative data process. I use the earthquake data as an example because it is about as clean and straightforward as datasets come. However, we’ll find soon enough that it contains the same caveats and complexities that are inherent to all real-world datasets.

Spoiler alert. Let’s be clear: 20 years of earthquake data is not remotely enough for amateurs nor scientists to make sweeping conclusions about the source of recent earthquake activity. So I’ve devoted a good part of Chapter 4 to explaining the limitations of the data (and my knowledge) and pointing to resources that you can pursue on your own.

Intended audience

About the code

I explain as much of the syntax as I can in Chapter 2, but it won’t make sense if you don’t have some experience with ggplot2. The easiest way to follow along is clone the Github repo here:

https://github.com/dannguyen/ok-earthquakes-RNotebook

Or, download it from Github as a zip archive. The data/ directory contains mirrors of the data files used in each of the notebooks.

The code I’ve written is at a beginner’s level of R (i.e. where I’m at), with a heavy reliance on the ggplot2 visualization library, plus some Python and Bash where I didn’t have the patience to figure out the R equivalents. If you understand how to work with data frames and have some familiarity with ggplot2, you should be able to follow along. Rather than organize and modularize my code, I’ve written it in an explicit, repetitive way, so it’s easier to see how to iteratively build and modify the visualizations. The drawback is that there’s the amount of code is more intimidating.

My recommendation is to not learn ggplot2 as I initially did: by copying and pasting ggplot2 snippets without understanding the theory of ggplot2’s “grammar of graphics.” Instead, read ggplot2 creator Hadley Wickham’s book: ggplot2: Elegant Graphics for Data Analysis. While the book seems stuffy and intimidating, it is by far the best way to get up to speed on ggplot2, both because of ggplot2’s nuanced underpinnings and Wickham’s excellent writing style. You can buy the slightly outdated (but still worthwhile) 2009 version or attempt to build the new edition that Wickham is currently working on.

X things you might learn how to do in R

Most of these things I learned during the process of writing this walkthrough:

- How to make a scatterplot

- How to reduce the effect of overplotting

- How to make a histogram

- How to customize the granularity of your histogram

- How to create a two-tone stacked chart

- How to reverse the stacking order of a two-tone chart

- How to plot a geographical shapefile as a polygon

- How to plot points on a shapefile

- How to specify the projection when plotting geographical data

- How to filter a data frame of coordinates using a shapefile

- How to highlight a single data point on a chart

- How to add value labels to a chart

- How to re-order the labels of a chart’s legend

- How to create a small multiples chart

- How to extract individual state boundaries from a U.S. shapefile

- How to use Google Maps and OpenStreetMap data in your map plots

- How to bin points with hexagons

- How to properly project hexbinned geographical data

- How to draw a trendline

- How to make a pie chart

- How to highlight a single chart within a small multiples chart

- How to use Gill Sans as your default chart font.

Chapter 1: Reading up on Oklahoma’s Earthquakes

http://www.newyorker.com/magazine/2015/04/13/weather-underground

NPR’s StateImpact project has been covering the issue for the past few years

For a 3-minute video primer on the issue, check out this clip by Reveal/The Center for Investigative Reporting:

The cause

In a 2013 article from Mother Jones:

Jean Antonides, vice president of exploration for New Dominion, which operates one of the wells near the Wilzetta Fault. He informed me that people claiming to know the true source of the Oklahoma quakes are “either lying to your face or they’re idiots.”

The industry’s general response is that, yes, earthquakes are happening. But that doesn’t mean it’s their fault. Until recently, they had the backing of the Oklahoma Geological Survey (OGS). In a position statement released in February 2014:

Overall, the majority, but not all, of the recent earthquakes appear to be the result of natural stresses, since they are consistent with the regional Oklahoma natural stress field.

Current drilling activity

The U.S. Geological Survey Earthquake Data

The U.S. Geological Survey (USGS) Earthquake Hazards Program is a comprehensive clearinghouse of earthquake data collected and processed by its vast network of seismic sensors. The Atlantic has a fine article about how (and how fast) the slippage of a fault line, 6 miles underground, is detected by USGS sensor stations and turned into data and news feeds.

The earthquake data that we will use is the most simplified version of USGS data: flat CSV files. While it contains the latitude and longitude of the earthquakes’ epicenters, it does not TKTK

Limitations of the data

The USGS earthquake data is as ideal of a dataset we could hope to work with. We’re not dealing with human voluntary reports of earthquakes, but automated readings from a network of sophisticated sensors. That said, sensors and computers aren’t perfect, as the Los Angeles Times and their Quakebot discovered in May. The USGS has a page devoted to errata in its reports.

Historical reliability of the data

It’s incorrect to think of the USGS data as deriving from a monolithic and unchanging network. For one thing, the USGS partners with universities and industry in operating and gathering data from seismographs. And obviously, technology, and sensors change, as does the funding for installing and operating them. From the USGS “Earthquake Facts and Statistics” page:

The USGS estimates that several million earthquakes occur in the world each year. Many go undetected because they hit remote areas or have very small magnitudes. The NEIC now locates about 50 earthquakes each day, or about 20,000 a year.

As more and more seismographs are installed in the world, more earthquakes can be and have been located. However, the number of large earthquakes (magnitude 6.0 and greater) has stayed relatively constant.

Is it possible that Oklahoma’s recent earthquake swarm in Oklahoma is just the result of the recent installation of a batch of seismographs in Oklahoma? Could be – though I think it’s likely the USGS would have mentioned this in its informational webpages and documentation about the Oklahoma earthquakes. In chapter 3, we’ll try to use some arithmetic and estimation to address the “Oh, it’s only because there’s more sensors” possibility. But to keep things relatively safe and simple, we’ll limit the scope of the historical data to about 20 years: from 1995 to August 2015. The number of sensors and technology may have changed in that span, but not as drastically as they have in even earlier decades. The USGS has a page devoted to describing the source and caveats of its earthquake data.

Getting the data

For quick access, the USGS provides real-time data feeds for time periods between an hour to the last 30 days. The Feeds & Notifications page shows a menu of data formats and services. For our purposes, the Spreadsheet Format option is good enough.

To access the entirety of the USGS data, visit their Archive page.

In Chapter 3, I briefly explain the relatively simple code for automating the collection of this data. The process isn’t that interesting though so if you just want the zipped up data, you can find it in the repo’s /data directory, in the file named usgs-quakes-dump.csv.zip. It weighs in at 27.1MB zipped, 105MB uncompressed.

Data attributes

The most relevant columns in the CSV version of the data:

- time

- latitude and longitude

- mag, i.e. magnitude

- type, as in, type of seismic event, which includes earthquakes, explosions, and quarry.

- place, a description of the geographical region of the seismic event.

Note: a non-trivial part of the USGS data is the measured magnitude of the earthquake. This is not an absolute, set-in-stone number (neither is time, for that matter), and the disparities in measurements of magnitude – including what state and federal agencies report – can be a major issue.

However, for our walkthrough, this disparity is minimized because we will be focused on the frequency and quantity of earthquakes, rather than their magnitude.

The U.S. Census Cartographic Boundary Shapefiles

The USGS data has a place column, but it’s not specific enough to be able to use in assigning earthquakes to U.S. states. So we need geospatial data, specifically, U.S. state boundaries. The U.S. Census has us covered with zipped-up simplified boundary data:

The cartographic boundary files are simplified representations of selected geographic areas from the Census Bureau’s MAF/TIGER geographic database. These boundary files are specifically designed for small scale thematic mapping.

Generalized boundary files are clipped to a simplified version of the U.S. outline. As a result, some off-shore areas may be excluded from the generalized files.

For more details about these files, please see our Cartographic Boundary File Description page.

There are shapefiles for various levels of political boundaries. We’ll use the ones found on the states page. The lowest resolution file – 1:20,000,000, i.e. 1 inch represents 20 million real inches – is adequate for our charting purposes.

The direct link to the Census’s 2014 1:20,000,000 shape file is here. I’ve also included it in this repo’s data/ directory.

The limits of state boundaries

The Census shapefiles are conveniently packaged for the analysis we want to do, though we’ll find out in Chapter 2 that there’s a good amount of complexity in preparing geospatial boundary data for visualization.

But the bigger question is: Are state boundaries really the best way to categorize earthquakes? These boundaries are political and have no correlation with the seismic lines that earthquakes occur along.

To use a quote from the New Yorker, attributed to Austin Holland, then-head seismologist of the Oklahoma Geological Survey:

Someone asked Holland about several earthquakes of greater than 4.0 magnitude which had occurred a few days earlier, across Oklahoma’s northern border, in Kansas. Holland joked, “Well, the earthquakes aren’t stopping at the state line, but my problems do.”

So, even if we assume something man-made is causing the earthquakes, it could very well be drilling in Texas, or any of Oklahoma’s other neighbors, that are causing Oklahoma’s earthquake swarm. And conversely, earthquakes caused by Oklahoma drilling but occur within other states’ borders will be uncounted when doing a strict state-by-state analysis.

Ideally, we would work with the fault map of Oklahoma. For example, the Oklahoma Geological Survey’s Preliminary Fault Map of Oklahoma:

The shapefile for the fault map can be downloaded as a zip file here, and other data files can be found posted at the OGS’s homepage. The reason why I’m not using it for this walkthrough is: it’s complicated. As in, it’s too complicated for me, though I might return to it when I have more time and to update this guide.

But like the lines of political boundaries, the lines of a fault map don’t just exist. They are discovered, often after an earthquake, and some of the data is voluntary reported by the drilling industry. Per the New Yorker’s April 2015 story:

“We know more about the East African Rift than we know about the faults in the basement in Oklahoma.” In seismically quiet places, such as the Midwest, which are distant from the well-known fault lines between tectonic plates, most faults are, instead, cracks within a plate, which are only discovered after an earthquake is triggered. The O.G.S.’s Austin Holland has long had plans to put together two updated fault maps, one using the available published literature on Oklahoma’s faults and another relying on data that, it was hoped, the industry would volunteer; but, to date, no updated maps have been released to the public.

As I’ll cover in chapter 4 of this walkthrough, things have obviously changed since the New Yorker’s story, including the release of updated fault maps.

About the Oklahoma Corporation Commission

The OCC has many relevant datasets from the drilling industry, in particular, spreadsheets of the locations of UIC (underground injection control) wells, i.e. where wastewater from drilling operations is injected into the earth for storage.

The data comes in several Excel files, all of which are mirrored in the data/ directory. The key fields are the locations of the wells and the volume of injection in barrels per month or year.

Unfortunately, the OCC doesn’t seem to have a lot of easily-found documentation for this data. Also unfortunate: this data is essential in attempting to make any useful conclusions about the cause of Oklahoma’s earthquakes. In Chapter 4, I go into significant detail about the OCC data’s limitations, but still fall very short of what’s needed to make the data usable..

Chapter 2: Basic R and Data Visualization Concepts

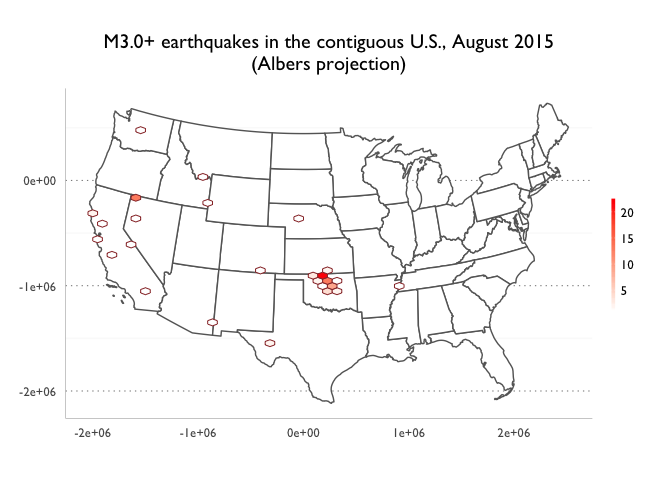

This chapter is focused on R syntax and concepts for working with data frames and generating visualizations via ggplot2. For demonstration purposes, I use a subset of the U.S. Geological Survey’s earthquake data (records for August 2015). Chapters 3 and 4 will repeat the same concepts except with earthquake data spanning back to 1995.

If you’re pretty comfortable with R, you can go ahead and skip to Chapter 3

Loading the libraries and themes

library(ggplot2)

library(scales)

library(grid)

library(dplyr)

library(lubridate)

library(rgdal)

library(hexbin)

library(ggmap)

- ggplot2 is the visualization framework.

- dplyr is a library for manipulating, transforming, and aggregating data frames. It also utilizes the nice piping from the magrittr package, which warms the Unix programmer inside me.

- lubridate - Greatly eases working with time values when generating time series and time-based filters. Think of it as moment.js for R.

- scales - Provides helpers for making scales for our ggplot2 charts.

- grid - contains some functionality that ggplot2 uses for chart styling and arrangement. including the unit() function.

- hexbin - Used with ggplot2 to make hexbin maps.

- rgdal - bindings for geospatial operations, including map projection and the reading of map shapefiles. Can be a bear to install due to a variety of dependencies. If you’re on OS X, I recommend installing Homebrew and running

brew install gdalbefore installing the rgdal package via R. - ggmap - Another ggplot2 plugin that allows the plotting of geospatial data on top of map imagery from Google Maps, OpenStreetMap, and Stamen Maps.

Customizing the themes

This is entirely optional, though it will likely add some annoying roadblocks for everyone trying to follow along. There’s not much to theming in ggplot2: just remember a shit-ton of syntax and have a lot of patience. You can view my ggplot theme script and copy it from here, and then make it locally available to your scripts (e.g. ./myslimthemes.R), so that you can source them as I do in each of my scripts:

source("./myslimthemes.R")

theme_set(theme_dan())

Or, just don’t use them when copying the rest of my code. All of the theme calls begin with theme_dan or dan. One dependency that might cause a few problems is the use of the extrafont package, which I use to set the default font to Gill Sans. I think I set it up so that systems without Gill Sans or extrafont will just throw a warning message. But I didn’t spend too much time testing that.

Downloading the data

For this chapter, we use two data files:

-

A feed of earthquake reports in CSV format from the U.S. Geological Survey. For this section, we’ll start by using the “Past 30 Days - All Earthquakes” feed, which can be downloaded at this URL:

http://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/all_month.csv

-

Cartographic boundary shapefiles for U.S. state boundaries, via the U.S. Census. The listing of state boundaries can be found here. There are several levels of detail; the resolution of

1:20,000,000is good enough for our purposes:http://www2.census.gov/geo/tiger/GENZ2014/shp/cb_2014_us_state_20m.zip

If you’re following along many years from now and the above links no longer work, the Github repo for this walkthrough contains copies of the raw files for you to practice on. You can either just clone this repo to get the files. Or download them from Github’s raw file storage – these URLs are subject to the whims of Github’s framework and may change down the road:

- https://raw.githubusercontent.com/dannguyen/ok-earthquakes-RNotebook/master/data/all_month.csv

- https://raw.githubusercontent.com/dannguyen/ok-earthquakes-RNotebook/master/data/cb_2014_us_state_20m.zip

The easiest thing is to just clone the repo or download and unpack the zip file of this repo.

First we create a data directory to store our files:

dir.create('./data')

Download earthquake data into a data frame

Because the data files can get big, I’ve included an if statement so that if a file exists at ./data/all_month.csv, the download command won’t attempt to re-download the file.

url <- "http://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/all_month.csv"

fname <- "./data/all_month.csv"

if (!file.exists(fname)){

print(paste("Downloading: ", url))

download.file(url, fname)

}

Alternatively, to download a specific interval of data, such as just the earthquakes for August 2015, use the USGS Archives endpoint:

base_url <- 'http://earthquake.usgs.gov/fdsnws/event/1/query.csv?'

starttimeparam <- 'starttime=2015-08-01 00:00:00'

endtime_param <- 'endtime=2015-08-31 23:59:59'

orderby_param <- 'orderby=time-asc'

url <- paste(base_url, starttimeparam, endtime_param, orderby_param, sep = "&")

print(paste("Downloading: ", url))

fname = 'data/2015-08.csv'

download.file(url, fname)

The standard read.csv() function can be used to convert the CSV file into a data frame, which I store into a variable named usgs_data:

usgs_data <- read.csv(fname, stringsAsFactors = FALSE)

Convert the time column to a Date-type object

The time column of usgs_data contains timestamps of the events:

head(usgs_data$time)

## [1] "2015-08-01T00:07:41.000Z" "2015-08-01T00:13:14.660Z"

## [3] "2015-08-01T00:23:01.590Z" "2015-08-01T00:30:14.000Z"

## [5] "2015-08-01T00:30:50.820Z" "2015-08-01T00:43:56.220Z"

However, these values are strings; to work with them as measurements of time, e.g. in creating a time series chart, we convert them to time objects (more specifically, objects of class POSIXct). The lubridate package provides the useful ymd_hms() function for fairly robust parsing of strings.

The standard way to transform a data frame column looks like this:

usgs_data$time <- ymd_hms(usgs_data$time)

However, I’ll more frequently use the mutate() function from the dplyr package:

usgs_data <- mutate(usgs_data, time = ymd_hms(time))

And because dplyr makes use of the piping convention from the magrittr package, I’ll often write the above snippet like this:

usgs_data <- usgs_data %>% mutate(time = ymd_hms(time))

Download and read the map data

Let’s download the U.S. state boundary data. This also comes in a zip file that we download and unpack:

url <- "http://www2.census.gov/geo/tiger/GENZ2014/shp/cb_2014_us_state_20m.zip"

fname <- "./data/cb_2014_us_state_20m.zip"

if (!file.exists(fname)){

print(paste("Downloading: ", url))

download.file(url, fname)

}

unzip(fname, exdir = "./data/shp")

The unzip command unpacks a list of files into the data/shp/ subdirectory. We only care about the one named cb_2014_us_state_20m.shp. To read that file into a SpatialPolygonsDataFrame-type object, we use the rgdal library’s readOGR() command and assign the result to the variable us_map:

us_map <- readOGR("./data/shp/cb_2014_us_state_20m.shp", "cb_2014_us_state_20m")

At this point, we’ve created two variables, usgs_data and us_map, which point to data frames for the earthquakes and the U.S. state boundaries, respectively. We’ll be building upon these data frames, or more specifically, creating subsets of their data for our analyses and visualizations.

Let’s count how many earthquake records there are in a month’s worth of USGS earthquake data:

nrow(usgs_data)

## [1] 8826

That’s a nice big number with almost no meaning. We want to know how big these earthquakes were, where they hit, and how many of the big ones hit. To go beyond this summary number, we turn to data visualization.

A quick introduction to simple charts in ggplot2

The earthquake data contains several interesting, independent dimensions: location (e.g. latitude and longitude), magnitude, and time. A scatterplot is frequently used to show the relationship between two or more of a dataset’s variables. In fact, we can think of geographic maps as a sort of scatterplot showing longitude and latitude along the x- and y-axis, though maps don’t imply that there’s a “relationship”, per se, between the two coordinates.

We’ll cover mapping later in this chapter, so let’s start off by plotting the earthquake data with time as the indepdendent variable, i.e. along the x-axis, and mag as the dependent variable, i.e. along the y-axis.

ggplot(usgs_data, aes(x = time, y = mag)) +

geom_point() +

scale_x_datetime() +

ggtitle("Worldwide seismic events for August 2015")

First of all, from a conceptual standpoint, one might argue that a scatterplot doesn’t much sense here, because how big an earthquake is seems to be totally unrelated to when it happens. This is not quite true, as weaker earthquakes can presage or closely follow – i.e. aftershocks – a massive earthquake.

But such an insight is lost in our current attempt because we’re attempting to plot the entire world’s earthquakes on a single plot. This ends up being impractical. There are so many earthquake events that we run into the problem of overplotting: Dots representing earthquakes of similar magnitude and time will overlap and obscure each other.

One way to fix this is to change the shape of the dots and increase their transparency, i.e. decrease their alpha:

ggplot(usgs_data, aes(x = time, y = mag)) +

geom_point(alpha = 0.2) +

scale_x_datetime() +

ggtitle("Worldwide seismic events for August 2015")

That’s a little better, but there are just too many points for us to visually process. For example, we can vaguely tell that there seem to be more earthquakes of magnitudes between 0 and 2. But we can’t accurately quantify the difference.

That’s a little better, but there are just too many points for us to visually process. For example, we can vaguely tell that there seem to be more earthquakes of magnitudes between 0 and 2. But we can’t accurately quantify the difference.

Fun with histograms

Having too much data to sensibly plot is going to be a major and recurring theme for the entirety of this walkthrough. In chapter 3, our general strategy will be to divide and filter the earthquake records by location, so that not all of the points are plotted in a single chart.

However, sometimes it’s best to just change the story you want to tell. With a scatterplot, we attempted (and failed) to show intelligible patterns in a month’s worth of worldwide earthquake activity. Let’s go simpler. Let’s attempt to just show what kinds of earthquakes, and how many, have occurred around the world in a month’s timespan.

A bar chart (histogram) is the most straightforward way to show how many earthquakes fall within a magnitude range.

Below, I simply organize the earthquakes into “buckets” by rounding them to the nearest integer:

ggplot(usgs_data, aes(x = round(mag))) +

geom_histogram(binwidth = 1)

The same chart, but with some polishing of its style. The color parameter for geom_histogram() allows us to create an outline to visually separate the bars:

ggplot(usgs_data, aes(x = round(mag))) +

geom_histogram(binwidth = 1, color = "white") +

# this removes the margin between the plot and the chart's bottom, and

# formats the y-axis labels into something more human readable:

scale_y_continuous(expand = c(0, 0), labels = comma) +

ggtitle("Worldwide earthquakes by magnitude, rounded to nearest integer\nAugust 2015")

The tradeoff for the histogram’s clarity is a loss in granularity. In some situations, it’s important to see the characteristics of the data at a more granular level.

The geom_histogram()’s binwidth parameter can do the work of rounding and grouping the values. The following snippet groups earthquakes the nearest tenth of a magnitude number. The size parameter in geom_histogram() controls the width of each bar’s outline:

ggplot(usgs_data, aes(x = mag)) +

geom_histogram(binwidth = 0.1, color = "white", size = 0.2) +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

ggtitle("Worldwide earthquakes grouped by tenths of magnitude number\nAugust 2015")

Time series

Another tradeoff between histogram and scatterplot is that the latter displayed two facets of the data: magnitude and time. With a histogram, we can only show one or the other.

A histogram in which the count of records is grouped according to their time element is more commonly known as a time series. Here’s how to chart the August 2015 earthquakes by day, which we derive from the time column using lubridate’s day() function:

ggplot(usgs_data, aes(x = day(time))) +

geom_histogram(binwidth = 1, color = "white") +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

ggtitle("Worldwide earthquakes grouped by tenths of magnitude number\nAugust 2015")

Stacked charts

We do have several options for showing two or more characteristics of a dataset as a bar chart. By setting the fill aesthetic of the data – in this case, to the mag column – we can see the breakdown of earthquakes by magnitude for each day:

ggplot(usgs_data, aes(x = day(time), fill = factor(round(mag)))) +

geom_histogram(binwidth = 1, color = "white") +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

ggtitle("Worldwide earthquakes grouped by day\nAugust 2015")

Let’s be a little nitpicky and rearrange the legend so that order of the labels follows the same order of how the bars are stacked:

ggplot(usgs_data, aes(x = day(time), fill = factor(round(mag)))) +

geom_histogram(binwidth = 1, color = "white") +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

guides(fill = guide_legend(reverse = TRUE))

ggtitle("Worldwide earthquakes grouped by day and magnitude\nAugust 2015")

## $title

## [1] "Worldwide earthquakes grouped by day and magnitude\nAugust 2015"

##

## attr(,"class")

## [1] "labels"

Limit the color choices

Before we move on from the humble histogram/time series, let’s address how unnecessarily busy the previous chart is. There are 9 gradations of color. Most of them, particularly at the extremes of the scale, are barely even visible because of how few earthquakes are in that magnitude range.

Is it worthwhile to devote so much space on the legend to M6.0+ earthquakes when in reality, so few earthquakes are in that range? Sure, you might say: those are by far the deadliest of the earthquakes. While that is true, the purpose of this chart is not to notify readers how many times their lives may have been rocked by a huge earthquake in the past 30 days – a histogram a very poor way to convey such information, period. Furthermore, the reality of the chart’s visual constraints make it next to impossible to even see those earthquakes on the chart.

So let’s simplify the color range by making an editorial decision: earthquakes below M3.0 aren’t terribly interesting. At M3.0+, they have the possibility of being notable depending on where they happen. Since the histogram already completely fails at showing location of the earthquakes, we don’t need to feel too guilty that our simplified, two-color chart obscures the quantity of danger from August 2015’s earthquakes:

ggplot(usgs_data, aes(x = day(time), fill = mag > 3)) +

geom_histogram(binwidth = 1, color = "black", size = 0.2) +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

scale_fill_manual(values = c("#fee5d9", "#de2d26"), labels = c("M3.0 >", "M3.0+")) +

guides(fill = guide_legend(reverse = TRUE)) +

ggtitle("Worldwide earthquakes grouped by day and magnitude\nAugust 2015")

You probably knew that the majority of earthquakes were below M3.0. But did you underestimate how much they were in the majority? The two-tone chart makes the disparity much clearer.

One more nitpicky design detail: the number of M3.0+ earthquakes, e.g. the red-colored bars, are more interesting than the number of weak earthquakes. But the jagged shape of the histogram makes it harder to count the M3.0+ earthquakes. So let’s reverse the order of the stacking (and the legend). The easieset way to do that is to change the “phrasing” of the conditional statement for the fill aesthetic, from mag > 3 to mag < 3:

ggplot(usgs_data, aes(x = day(time), fill = mag < 3)) +

geom_histogram(binwidth = 1, color = "black", size = 0.2) +

scale_x_continuous(breaks = pretty_breaks()) +

scale_y_continuous(expand = c(0, 0)) +

scale_fill_manual(values = c("#de2d26", "#fee5d9"), labels = c("M3.0+", "M3.0 >")) +

guides(fill = guide_legend(reverse = TRUE)) +

ggtitle("Worldwide earthquakes grouped by day and magnitude\nAugust 2015")

It’s the story, stupid

Though it’s easy to get caught up in the technical details of how to build and design a chart, remember the preeminent role of editorial insight and judgment. The details you choose to focus on and the story you choose to tell have the greatest impact on the success of your data visualizations.

Filtering the USGS data

TKTK

The USGS data feed contains more than just earthquakes, though. So we use dplyr’s group_by() function on the type column and then summarise() the record counts:

usgs_data %>% group_by(type) %>% summarise(count = n())

## Source: local data frame [3 x 2]

##

## type count

## 1 earthquake 8675

## 2 explosion 43

## 3 quarry blast 108

For this particular journalstic endeavour, we don’t care about explosions and quarry blasts. We also only care about events of a reasonable magnitude – remember that magnitudes under 3.0 are often not even noticed by the average person. Likewise, most of the stories about Oklahoma’s earthquakes focus on earthquakes of magnitude of at least 3.0, so let’s filter usgs_data appropriately and store the result in a variable named quakes:

There are several ways to create a subset of a data frame:

-

Using bracket notation:

quakes <- usgs_data[usgs_data$mag >= 3.0 & usgs_data$type == 'earthquake',] -

Using

subset():quakes <- subset(usgs_data, usgs_data$mag >= 3.0 & usgs_data$type == 'earthquake') -

And my preferred convention: using dplyr’s

filter():

quakes <- usgs_data %>% filter(mag >= 3.0, type == 'earthquake')

The quakes dataframe is now about a tenth the size of the data we original downloaded, which will work just fine for our purposes:

nrow(quakes)

## [1] 976

Plotting the earthquake data without a map

A geographical map can be thought of a plain ol’ scatterplot. In this case, each dot is plotted using the longitude and latitude values, which serve as the x and y coordinates, respectively:

ggplot(quakes, aes(x = longitude, y = latitude)) + geom_point() +

ggtitle("M3.0+ earthquakes worldwide, August 2015")

Even without the world map boundaries, we can see in the locations of the earthquakes a rough outline of the world’s fault lines. Compare the earthquakes plot to this Digital Tectonic Activity Map from NASA:

Even with just ~1,000 points – and, in the next chapter, 20,000+ points, we again run into a problem of overplotting, so I’ll increase the size and transparency of each point and change the point shape. And just to add some variety, I’ll change the color of the points from black to firebrick:

We can apply these styles in the geom_point() call:

ggplot(quakes, aes(x = longitude, y = latitude)) +

geom_point(size = 3,

alpha = 0.2,

shape = 1,

color = 'firebrick') +

ggtitle("M3.0+ earthquakes worldwide, August 2015")

Varying the size by magnitude

Obviously, some earthquakes are more momentous than others. An easy way to show this would be to vary the size of the point by mag:

ggplot(quakes, aes(x = longitude, y = latitude)) +

geom_point(aes(size = mag),

shape = 1,

color = 'firebrick')

However, this understates the difference between earthquakes, as their magnitudes are measured on a logarithmic scale; a M5.0 earthquake has 100 times the amplitude of a M3.0 earthquake. Scaling the circles accurately and fitting them on the map would be…a little awkward (and I also don’t know enough about ggplot to map the legend’s labels to the proper non-transformed values). In any case, for the purposes of this investigation, we mostly care about the frequency of earthquakes, rather than their actual magnitudes, so I’ll leave out the size aesthetic in my examples.

Let’s move on to plotting the boundaries of the United States.

Plotting the map boundaries

The data contained in the us_map variable is actually a kind of data frame, a SpatialPolygonsDataFrame, which is provided to us as part of the sp package, which was included via the rgdal package.

Since us_map is a data frame, it’s pretty easy to plop it right into ggplot():

ggplot() +

geom_polygon(data = us_map, aes(x = long, y = lat, group = group)) +

theme_dan_map()

By default, things look a little distorted because the longitude and latitude values are treated as values on a flat, 2-dimensional plane. As we’ve learned, the world is not flat. So to have the geographical points – in this case, the polygons that make up state boundaries – look more like we’re accustomed to on a globe, we have to project the longitude/latitude values to a different coordinate system.

This is a fundamental cartographic concept that is beyond my ability to concisely and intelligibly explain here, so I direct you to Michael Corey, of the Center for Investigative Reporting, and his explainer, “Choosing the Right Map Projection”. And Mike Bostock has a series of excellent interactive examples showing some of the complexities of map projection; I embed one of his D3 examples below:

Once you understand map projections, or at least are aware of their existence, applying them to ggplot() is straightforward. In the snippet below, I apply the Albers projection, which is the standard projection for the U.S. Census (and Geological Survey) using the coord_map() function. Projecting in Albers requires a couple of parameters that I’m just going to copy and modify from this r-bloggers example, though I assume it has something to do with specifying the parallels needed for accurate proportions:

ggplot() +

geom_polygon(data = us_map, aes(x = long, y = lat, group = group)) +

coord_map("albers", lat0 = 38, latl = 42) + theme_classic()

Filtering out Alaska and Hawaii

The U.S. Census boundaries only contains data for the United States. So why does our map span the entire globe? Because Alaska has the annoying property of being both the most western and eastern point of the United States, such that it wraps around to the other side of our coordinate system, i.e. from longitude -179 to 179.

There’s obviously a more graceful, mathematically-proper way of translating the coordinates so that everything fits nicely on our chart. But for now, to keep things simple, let’s just remove Alaska – and Hawaii, and all the non-states – as that gives us an opportunity to practice filtering SpatialPolygonsDataFrames.

First, we inspect the column names of us_map’s data to see which one corresponds to the name of each polygon, e.g. Iowa or CA:

colnames(us_map@data)

## [1] "STATEFP" "STATENS" "AFFGEOID" "GEOID" "STUSPS" "NAME"

## [7] "LSAD" "ALAND" "AWATER"

Both NAME and STUSPS (which I’m guessing stands for U.S. Postal Service) will work:

head(select(us_map@data, NAME, STUSPS))

## NAME STUSPS

## 0 California CA

## 1 District of Columbia DC

## 2 Florida FL

## 3 Georgia GA

## 4 Idaho ID

## 5 Illinois IL

To filter out the data that corresponds to Alaska, i.e. STUSPS == "AK":

byebye_alaska <- us_map[us_map$STUSPS != 'AK',]

To filter out Alaska, Hawaii, and the non-states, e.g. Guam and Washington D.C., and then assign the result to the variable usc_map:

x_states <- c('AK', 'HI', 'AS', 'DC', 'GU', 'MP', 'PR', 'VI')

usc_map <- subset(us_map, !(us_map$STUSPS %in% x_states))

Now let’s map the contiguous United States, and, while we’re here, let’s change the style of the map to be in dark outline with white fill:

ggplot() +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

coord_map("albers", lat0 = 38, latl = 42)

Plotting the quakes on the map

Plotting the earthquake data on top of the United States map is as easy as adding two layers together; notice how I plot the map boundaries before the points, or else the map (or rather, its white fill) will cover up the earthquake points:

ggplot(quakes, aes(x = longitude, y = latitude)) +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

geom_point(size = 3,

alpha = 0.2,

shape = 4,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

theme_dan_map()

Well, that turned out poorly. The viewpoint reverts to showing the entire globe. The problem is easy to understand: the plot has to account for both the United States boundary data and the worldwide locations of the earthquakes.

In the next section, we’ll tackle this problem by converting the earthquakes data into its own spatial-aware data frame. Then we’ll cross-reference it with the data in usc_map to remove earthquakes that don’t originate from within the boundaries of the contiguous United States.

Working with and filtering spatial data points

To reiterate, usc_map is a SpatialPolygonsDataFrame, and quakes is a plain data frame. We want to use the geodata in usc_map to remove all earthquake records that don’t take place within the boundaries of the U.S. contiguous states.

The first question to ask is: why don’t we just filter quakes by one of its columns, like we did for mag and type? The problem is that while the USGS data has a place column, it is not U.S.-centric, i.e. there’s not an easy way to say, “Just show me records that take place within the United States”, because place doesn’t always mention the country:

head(quakes$place)

## [1] "56km SE of Ofunato, Japan" "287km N of Ndoi Island, Fiji"

## [3] "56km NNE of Port-Olry, Vanuatu" "112km NNE of Vieques, Puerto Rico"

## [5] "92km SSW of Nikolski, Alaska" "53km NNW of Chongdui, China"

So instead, we use the latitude/longitude coordinates stored in usc_map to filter out earthquakes by their latitude/longitude values. The math to do this from scratch is quite…labor intensive. Luckily, the sp library can do this work for us, we just have to first convert the quakes data frame into one of sp’s special data frames: a SpatialPointsDataFrame.

sp_quakes <- SpatialPointsDataFrame(data = quakes,

coords = quakes[,c("longitude", "latitude")])

Then we assign it the same projection as us_map (note that usc_map also has this same projection). First let’s inspect the actual projection of us_map:

us_map@proj4string

## CRS arguments:

## +proj=longlat +datum=NAD83 +no_defs +ellps=GRS80 +towgs84=0,0,0

Now assign that value to sp_quakes (which, by default, has a proj4string attribute of NA):

sp_quakes@proj4string <- us_map@proj4string

Let’s see what the map plot looks like. Note that in the snippet below, I don’t use sp_quakes as the data set, but as.data.frame(sp_quakes). This conversion is necessary as ggplot2 doesn’t know how to deal with the SpatialPointsDataFrame (and yet it does fine with SpatialPolygonsDataFrames…whatever…):

ggplot(as.data.frame(sp_quakes), aes(x = longitude, y = latitude)) +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

geom_point(size = 3,

alpha = 0.2,

shape = 4,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

theme_dan_map()

No real change…we’ve only gone through the process of making a spatial points data frame. Creating that spatial data frame, then converting it back to a data frame to use in ggplot() has basically no effect – though it would if the geospatial data in usc_map had a projection that significantly transformed its lat/long coordinates.

How to subset a spatial points data frame

To see the change that we want – just earthquakes in the contiguous United States – we subset the spatial points data frame, i.e. sp_quakes, using usc_map. This is actually quite easy, and uses similar notation as when subsetting a plain data frame. I actually don’t know enough about basic R notation and S4 objects to know or explain why this works, but it does:

sp_usc_quakes <- sp_quakes[usc_map,]

usc_quakes <- as.data.frame(sp_usc_quakes)

ggplot(usc_quakes, aes(x = longitude, y = latitude)) +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

geom_point(size = 3,

alpha = 0.5,

shape = 4,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

ggtitle("M3.0+ earthquakes in the contiguous U.S. during August 2015") +

theme_dan_map()

Subsetting points by state

What if we want to just show earthquakes in California? We first subset usc_map:

ca_map <- usc_map[usc_map$STUSPS == 'CA',]

Then we use ca_map to filter sp_quakes:

ca_quakes <- as.data.frame(sp_quakes[ca_map,])

Mapping California and its quakes:

ggplot(ca_quakes, aes(x = longitude, y = latitude)) +

geom_polygon(data = ca_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.3) +

geom_point(size = 3,

alpha = 0.8,

shape = 4,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

theme_dan_map() +

ggtitle("M3.0+ earthquakes in California during August 2015")

Joining shape file attributes to a data frame

The process of subsetting usc_map for each state, then subsetting the sp_quakes data frame, is a little cumbersome. Another approach is to add a new column to the earthquakes data frame that specifies which state the earthquake was in.

As I mentioned previously, the USGS data has a place column, but it doesn’t follow a structured taxonomy of geographical labels and serves primarily as a human-friendly label, e.g. "South of the Fiji Islands" and "Northern Mid-Atlantic Ridge".

So let’s add the STUSPS column to sp_quakes. First, since we’re done mapping just the contiguous United States, let’s create a map that includes all the 50 U.S. states and store it in the variable states_map:

x_states <- c('AS', 'DC', 'GU', 'MP', 'PR', 'VI')

states_map <- subset(us_map, !(us_map$STUSPS %in% x_states))

The sp package’s over() function can be used to join the rows of sp_quakes to the STUSPS column of states_map. In other words, the resulting data frame of earthquakes will have a STUSPS column, and the quakes in which the geospatial coordinates overlap with polygons in states_map will have a value in it, e.g. "OK", "CA":

xdf <- over(sp_quakes, states_map[, 'STUSPS'])

Most of the STUSPS values in xdf will be <NA> because, most of the earthquakes do not take place in the United States. Though we see of all the United States, Oklahoma (i.e. OK) has experienced the most M3.0+ earthquakes by far in August 2015, twice as many as Alaska:

xdf %>% group_by(STUSPS) %>%

summarize(count = n()) %>%

arrange(desc(count))

## Source: local data frame [15 x 2]

##

## STUSPS count

## 1 NA 858

## 2 OK 55

## 3 AK 27

## 4 NV 14

## 5 CA 7

## 6 KS 4

## 7 HI 2

## 8 TX 2

## 9 AZ 1

## 10 CO 1

## 11 ID 1

## 12 MT 1

## 13 NE 1

## 14 TN 1

## 15 WA 1

To get a data frame of U.S.-only quakes, we combine xdf with sp_quakes. We then filter the resulting data frame by removing all rows in which STUSPS is <NA>:

ydf <- cbind(sp_quakes, xdf) %>% filter(!is.na(STUSPS))

states_quakes <- as.data.frame(ydf)

In later examples, I’ll plot just the contiguous United States, so I’m going to remake the usc_quakes data frame in the same fashion as states_quakes:

usc_map <- subset(states_map, !states_map$STUSPS %in% c('AK', 'HI'))

xdf <- over(sp_quakes, usc_map[, 'STUSPS'])

usc_quakes <- cbind(sp_quakes, xdf) %>% filter(!is.na(STUSPS))

usc_quakes <- as.data.frame(usc_quakes)

So how is this different than before, when we derived usc_quakes?

old_usc_quakes <- as.data.frame(sp_quakes[usc_map,])

Again, the difference in our latest approach is that the resulting data frame, in this case the new usc_quakes and states_quakes, has a STUSPS column:

head(select(states_quakes, place, STUSPS))

## place STUSPS

## 1 119km ENE of Circle, Alaska AK

## 2 73km ESE of Lakeview, Oregon NV

## 3 66km ESE of Lakeview, Oregon NV

## 4 18km SSW of Medford, Oklahoma OK

## 5 19km NW of Anchorage, Alaska AK

## 6 67km ESE of Lakeview, Oregon NV

The STUSPS column makes it possible to do aggregates of the earthquakes dataframe by STUSPS. Note that states with 0 earthquakes for August 2015 are omitted:

ggplot(states_quakes, aes(STUSPS)) + geom_histogram(binwidth = 1) +

scale_y_continuous(expand = c(0, 0)) +

ggtitle("M3.0+ earthquakes in the United States, August 2015")

Layering and arranging ggplot visuals

In successive examples, I’ll be using a few more ggplot tricks and features to add a little more narrative and clarity to the basic data visualizations.

Highlighting and annotating data

To highlight the Oklahoma data in orange, I simply add another layer via geom_histogram(), except with data filtered for just Oklahoma:

ok_quakes <- states_quakes[states_quakes$STUSPS == 'OK',]

ggplot(states_quakes, aes(STUSPS)) +

geom_histogram(binwidth = 1) +

geom_histogram(data = ok_quakes, binwidth = 1, fill = '#FF6600') +

ggtitle("M3.0+ earthquakes in the United States, August 2015")

We can add labels to the bars with a stat_bin() call:

ggplot(states_quakes, aes(STUSPS)) +

geom_histogram(binwidth = 1) +

geom_histogram(data = ok_quakes, binwidth = 1, fill = '#FF6600') +

stat_bin(binwidth=1, geom="text", aes(label = ..count..), vjust = -0.5) +

scale_y_continuous(expand = c(0, 0), limits = c(0, 60)) +

ggtitle("M3.0+ earthquakes in the United States, August 2015")

Highlighting Oklahoma in orange on the state map also involves just making another layer call:

ok_map <- usc_map[usc_map$STUSPS == 'OK',]

ggplot(usc_quakes, aes(x = longitude, y = latitude)) +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

geom_polygon(data = ok_map, aes(x = long, y = lat, group = group),

fill = "#FAF2EA", color = "#FF6600", size = 0.4) +

geom_point(size = 2,

alpha = 0.5,

shape = 4,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

ggtitle("M3.0+ earthquakes in the United States, August 2015") +

theme_dan_map()

Time series

These are not too different from the previous histogram examples, except that the x-aesthetic is set to some function of the earthquake data frame’s time column. We use the lubridate package, specifically floor_date() to bin the records to the nearest hour. Then we use scale_x_date() to scale the x-axis accordingly; the date_breaks() and date_format() functions come from the scales package.

For example, using the states_quakes data frame, here’s a time series showing earthquakes by day of the month-long earthquake activity in the United States:

ggplot(states_quakes, aes(x = floor_date(as.Date(time), 'day'))) +

geom_histogram(binwidth = 1) +

scale_x_date(breaks = date_breaks("week"), labels = date_format("%m/%d")) +

scale_y_continuous(expand = c(0, 0), breaks = pretty_breaks()) +

ggtitle("Daily counts of M3.0+ earthquakes in the United States, August 2015")

To create a stacked time series, in which Oklahoma’s portion of earthquakes is in orange, it’s just a matter of adding another layer as before:

ggplot(states_quakes, aes(x = floor_date(as.Date(time), 'day'))) +

geom_histogram(binwidth = 1, fill = "gray") +

geom_histogram(data = filter(states_quakes, STUSPS == 'OK'), fill = "#FF6600", binwidth = 1) +

scale_y_continuous(expand = c(0, 0), breaks = pretty_breaks()) +

scale_x_date(breaks = date_breaks("week"), labels = date_format("%m/%d")) +

ggtitle("Daily counts of M3.0+ earthquakes, August 2015\nOklahoma vs. all other U.S.")

Or, alternatively, we can set the fill aesthetic to the STUSPS column; I use the guides() and scale_fill_manual() functions to order the colors and labels as I want them to be:

ggplot(states_quakes, aes(x = floor_date(as.Date(time), 'day'),

fill = STUSPS != 'OK')) +

geom_histogram(binwidth = 1) +

scale_x_date(breaks = date_breaks("week"), labels = date_format("%m/%d")) +

scale_fill_manual(values = c("#FF6600", "gray"), labels = c("OK", "Not OK")) +

guides(fill = guide_legend(reverse = TRUE)) +

ggtitle("Daily counts of M3.0+ earthquakes, August 2015\nOklahoma vs. all other U.S.")

Small multiples

ggplot2’s facet_wrap() function provides a convenient way to generate a grid of visualizations, one for each value of a variable, i.e. Tufte’s “small multiples”, or, lattice/trellis charts:

ggplot(data = mutate(usc_quakes, week = floor_date(time, "week")),

aes(x = longitude, y = latitude)) +

geom_polygon(data = usc_map, aes(x = long, y = lat, group = group),

fill = "white", color = "#444444", size = 0.1) +

geom_point(size = 1,

alpha = 0.5,

shape = 1,

color = 'firebrick') +

coord_map("albers", lat0 = 38, latl = 42) +

facet_wrap(~ week, ncol = 2) +

ggtitle("M3.0+ Earthquakes in the contiguous U.S. by week, August 2015") +

theme_dan_map()

Hexbins

Dot map

ggplot() +

geom_polygon(data = ok_map,

aes(x = long, y = lat, group = group),

fill = "white", color = "#666666", size = 0.5) +

geom_point(data = ok_quakes,

aes(x = longitude, y = latitude), color = "red", alpha = 0.4, shape = 4, size = 2.5) +

coord_equal() +

ggtitle("M3.0+ earthquakes in Oklahoma, August 2015") +

theme_dan_map()

Hexbin without projection, Oklahoma

ggplot() +

geom_polygon(data = ok_map,

aes(x = long, y = lat, group = group),

fill = "white", color = "#666666", size = 0.5) +

stat_binhex(data = ok_quakes, bins = 20,

aes(x = longitude, y = latitude), color = "#999999") +

scale_fill_gradientn(colours = c("white", "red")) +

coord_equal() +

ggtitle("M3.0+ earthquakes in Oklahoma, August 2015 (Hexbin)") +

theme_dan_map()

When viewing the entire contiguous U.S., the shortcomings of the standard projection are more obvious. I’ve left on the x- and y-axis so that you can see the actual values of the longitude and latitude columns and then compare them to the Albers-projected values in the next example:

ggplot() +

geom_polygon(data = usc_map,

aes(x = long, y = lat, group = group),

fill = "white", color = "#666666", size = 0.5) +

stat_binhex(data = usc_quakes, bins = 50,

aes(x = longitude, y = latitude), color = "#999999") +

scale_fill_gradientn(colours = c("white", "red")) +

coord_equal() +

ggtitle("M3.0+ earthquakes in the contiguous U.S., August 2015")

Projecting hexbins onto Albers

We can’t rely on the coord_map() function to neatly project the coordinate system to the Albers system, because stat_binhex() needs to do its binning on the non-translated longitude/latitude. If that poor explanation makes no sense to you, that’s OK, it barely makes sense to me.

The upshot is that if we want the visually-pleasing Albers projection for our hexbinned-map, we need to apply the projection to the data frames before we try to plot them.

We use the spTransform() function to apply the Albers coordinate system to usc_map and usc_quakes:

# store the Albers system in a variable as we need to apply it separately

# to usc_map and usc_quakes

albers_crs <- CRS("+proj=laea +lat_0=45 +lon_0=-100 +x_0=0 +y_0=0 +a=6370997 +b=6370997 +units=m +no_defs")

# turn usc_quakes into a SpatialPointsDataFrame

sp_usc <- SpatialPointsDataFrame(data = usc_quakes,

coords = usc_quakes[,c("longitude", "latitude")])

# give it the same default projection as usc_map

sp_usc@proj4string <- usc_map@proj4string

# Apply the Albers projection to the spatial quakes data and convert it

# back to a data frame. Note that the Albers coordinates are stored in

# latitude.2 and longitude.2

albers_quakes <- as.data.frame(spTransform(sp_usc, albers_crs))

# Apply the Albers projection to usc_map

albers_map <- spTransform(usc_map, albers_crs)

Note: in the x and y aesthetic for the stat_binhex() call, we refer to longitude.2 and latitude.2. This is because albers_quakes is the result of two SpaitalPointsDataFrame conversions; each conversion creates a new longitude and latitude column.

After those extra steps, we can now hexbin our earthquake data on the Albers coordinate system; again, this is purely an aesthetic fix. As in the previous example, I’ve left on the x- and y-axis so you can see how the range of values that the Albers projection maps to:

ggplot() +

geom_polygon(data = albers_map,

aes(x = long, y = lat, group = group),

fill = "white", color = "#666666", size = 0.5) +

stat_binhex(data = as.data.frame(albers_quakes), bins = 50,

aes(x = longitude.2, y = latitude.2), color = "#993333") +

scale_fill_gradientn(colours = c("white", "red")) +

coord_equal() +

ggtitle("M3.0+ earthquakes in the contiguous U.S., August 2015\n(Albers projection)")

Google Satellite Maps

In lieu of using shapefiles that contain geological features:

goog_map <- get_googlemap(center = "Oklahoma", size = c(500,500), zoom = 6, scale = 2, maptype = 'terrain')

We use ggmap() to draw it as a plot:

ggmap(goog_map) + theme_dan_map()

Use Google feature styles to remove administrative labels:

goog_map2 <- get_googlemap(center = "Oklahoma", size = c(500,500),

zoom = 6, scale = 2, maptype = 'terrain',

style = c(feature = "administrative.province", element = "labels", visibility = "off"))

# plot the map

ggmap(goog_map2) + theme_dan_map()

With points

ggmap(goog_map2, extent = 'panel') +

geom_point(data = states_quakes, aes(x = longitude, y = latitude), alpha = 0.2, shape = 4, colour = "red") + theme_dan_map()

With hexbin and satellite:

With hexbin, note that we don’t use Albers projection:

hybrid_goog_map <- get_googlemap(center = "Oklahoma", size = c(640,640), zoom = 6, scale = 2, maptype = 'hybrid',

style = c(feature = "administrative.province", element = "labels", visibility = "off"))

ggmap(hybrid_goog_map, extent = 'panel') +

stat_binhex(data = states_quakes, aes(x = longitude, y = latitude), bins = 30, alpha = 0.3 ) +

scale_fill_gradientn(colours=c("pink","red")) +

guides(alpha = FALSE) + theme_dan_map()

At this point, we’ve covered just the general range of the data-munging and visualization techniques we need to effectively analyze and visualize the historical earthquake data for the United States.

Chapter 3: Exploring Earthquake Data from 1995 to 2015

Questions to ask:

- What has Oklahoma’s earthquake activity been historically?

- Just how significant is the recent swarm of earthquakes compared to the rest of Oklahoma’s history?

- Are there any other states experiencing an upswing in earthquake activity?

Setup

# Load libraries

library(ggplot2)

library(scales)

library(grid)

library(dplyr)

library(lubridate)

library(rgdal)

# load my themes:

source("./myslimthemes.R")

theme_set(theme_dan())

# create a data directory

dir.create('./data')

## Warning in dir.create("./data"): './data' already exists

Download the map data as before:

fname <- "./data/cb_2014_us_state_20m.zip"

if (!file.exists(fname)){

url <- "http://www2.census.gov/geo/tiger/GENZ2014/shp/cb_2014_us_state_20m.zip"

print(paste("Downloading: ", url))

download.file(url, fname)

}

unzip(fname, exdir = "./data/shp")

Load the map data and make two versions of it: - states_map removes all non-U.S. states, such as Washington D.C. and Guam: - cg_map removes all non-contiguous states, e.g. Alaska and Hawaii.

us_map <- readOGR("./data/shp/cb_2014_us_state_20m.shp", "cb_2014_us_state_20m")

## OGR data source with driver: ESRI Shapefile

## Source: "./data/shp/cb_2014_us_state_20m.shp", layer: "cb_2014_us_state_20m"

## with 52 features

## It has 9 fields

## Warning in readOGR("./data/shp/cb_2014_us_state_20m.shp",

## "cb_2014_us_state_20m"): Z-dimension discarded

states_map <- us_map[!us_map$STUSPS %in%

c('AS', 'DC', 'GU', 'MP', 'PR', 'VI'),]

We won’t be mapping Alaska but including it is critical for analysis.

Download the historical quake data

TK explanation.

fn <- './data/usgs-quakes-dump.csv'

zname <- paste(fn, 'zip', sep = '.')

if (!file.exists(zname) || file.size(zname) < 2048){

url <- paste("https://github.com/dannguyen/ok-earthquakes-RNotebook",

"raw/master/data", zname, sep = '/')

print(paste("Downloading: ", url))

# note: if you have problems downloading from https, you might need to include

# RCurl

download.file(url, zname, method = "libcurl")

}

unzip(zname, exdir="data")

# read the data into a dataframe

usgs_data <- read.csv(fn, stringsAsFactors = FALSE)

The number of records in this historical data set:

nrow(usgs_data)

## [1] 732580

Add some convenience columns. The date column can be derived with the standard as.Date() function. We use lubridate to convert the time column to a proper R time object, and then derive a year and era column:

# I know this could be done in one/two mutate() calls...but sometimes

# that causes RStudio to crash, due to the size of the dataset...

usgs_data <- usgs_data %>%

mutate(time = ymd_hms(time)) %>%

mutate(date = as.Date(time)) %>%

mutate(year = year(time)) %>%

mutate(era = ifelse(year <= 2000, "1995-2000",

ifelse(year <= 2005, "2001-2005",

ifelse(year <= 2010, "2006-2010", "2011-2015"))))

Remove all non-earthquakes and events with magnitude less than 3.0:

quakes <- usgs_data %>% filter(mag >= 3.0) %>%

filter(type == 'earthquake')

This leaves us with this about half as many records:

nrow(quakes)

## [1] 368440

# Create a spatial data frame----------------------------------

sp_quakes <- SpatialPointsDataFrame(data = quakes,

coords = quakes[,c("longitude", "latitude")])

sp_quakes@proj4string <- states_map@proj4string

# subset for earthquakes in the U.S.

xdf <- over(sp_quakes, states_map[, 'STUSPS'])

world_quakes <- cbind(sp_quakes, xdf)

states_quakes <- world_quakes %>% filter(!is.na(STUSPS))

Add a is_OK convenience column to states_quakes:

states_quakes$is_OK <- states_quakes$STUSPS == "OK"

Let’s make some (simple) maps

For mapping purposes, we’ll make a contiguous-states-only map, which again, I’m doing because I haven’t quite figured out how to project Alaska and Hawaii and their earthquakes in a practical way. So in these next few contiguous-states-only mapping exercise, we lose two of the most relatively active states. For now, that’s an acceptable simplification; remember that in the previous chapter, we saw how Oklahoma’s recent earthquake activity outpaced all of the U.S., including Alaska and Hawaii.

cg_map <- states_map[!states_map$STUSPS %in% c('AK', 'HI'), ]

cg_quakes <- states_quakes[!states_quakes$STUSPS %in% c('AK', 'HI'), ]

Trying to map all the quakes leads to the unavoidable problem of overplotting:

ggplot() +

geom_polygon(data = cg_map, aes(x = long, y = lat, group = group), fill = "white", color = "#777777") +

geom_point(data = cg_quakes, aes(x = longitude, y = latitude), shape = 1, color = "red", alpha = 0.2) +

coord_map("albers", lat0 = 38, latl = 42) +

theme_dan_map() +

ggtitle("M3.0+ earthquakes in U.S. since 1995")

So we want to break it down by era:

By era:

Legend hack: http://stackoverflow.com/questions/5290003/how-to-set-legend-alpha-with-ggplot2

ggplot() +

geom_polygon(data = cg_map, aes(x = long, y = lat, group = group), fill = "white", color = "#777777") +

geom_point(data = cg_quakes, aes(x = longitude, y = latitude, color = era), shape = 1, alpha = 0.2) +

coord_map("albers", lat0 = 38, latl = 42) +

guides(colour = guide_legend(override.aes = list(alpha = 1))) +

scale_colour_brewer(type = "qual", palette = "Accent") +

theme_dan_map() +

ggtitle("M3.0+ earthquakes in U.S. since 1995")

Small multiples

Again, we still have overplotting so break up the map into small multiples

ggplot() +

geom_polygon(data = cg_map, aes(x = long, y = lat, group = group), fill = "white", color = "#777777") +

geom_point(data = cg_quakes, aes(x = longitude, y = latitude), shape = 4, alpha = 0.2, color = "red") +

coord_map("albers", lat0 = 38, latl = 42) +

guides(colour = guide_legend(override.aes = list(alpha = 1))) +

theme_dan_map() +

facet_wrap(~ era) +

ggtitle("M3.0+ earthquakes in U.S. by time period")

Small multiples on Oklahoma

There’s definitely an uptick in Oklahoma, but there’s so much noise from the nationwide map that it’s prudent to focus our attention to only Oklahoma for now:

First, let’s make a data frame and spatial data frame of Oklahoma and its quakes:

# Dataframe of just Oklahoma quakes:

ok_quakes <- filter(states_quakes, is_OK)

# map of just Oklahoma state:

ok_map <- states_map[states_map$STUSPS == 'OK',]

Mapping earthquakes by era, for Oklahoma:

ggplot() +

geom_polygon(data = ok_map, aes(x = long, y = lat, group = group), fill = "white", color = "#777777") +

geom_point(data = ok_quakes, aes(x = longitude, y = latitude), shape = 4, alpha = 0.5, color = "red") +

coord_map("albers", lat0 = 38, latl = 42) +

theme_dan_map() +

facet_wrap(~ era) +

ggtitle("Oklahoma M3.0+ earthquakes by time period")

Broadening things: Let’s not make a map

Within comparisons: OK year over year

It’s pretty clear that Oklahoma has a jump in earthquakes from 2011 to 2015.

But there’s no need to use a map for that.

TK histogram

Look at Oklahoma, year to year

Let’s focus our look at just Oklahoma:

ggplot(data = ok_quakes, aes(year)) +

scale_y_continuous(expand = c(0, 0)) +

geom_histogram(binwidth = 1, fill = "#DDCCCC") +

geom_histogram(data = filter(ok_quakes, year >= 2012 & year < 2015), binwidth = 1,

fill="#990000") +

geom_histogram(data = filter(ok_quakes, year == 2015), binwidth = 1, fill="#BB0000") +

stat_bin( data = filter(ok_quakes, year >= 2012),

aes(ymax = (..count..),

# feels so wrong, but looks so right...

label = ifelse(..count.. > 0, ..count.., "")),

binwidth = 1, geom = "text", vjust = 1.5, size = 3.5,

fontface = "bold", color = "white" ) +

ggtitle("Oklahoma M3+ earthquakes, from 1995 to August 2015")

Let’s be more specific: by month

Oklahoma earthquakes by month, since 2008

# This manual creation of breaks is the least awkward way I know of creating a

# continuous scale from month-date and applying it to stat_bin for a more

# attractive graph

mbreaks = as.numeric(seq(as.Date('2008-01-01'), as.Date('2015-08-01'), '1 month'))

ok_1995_to_aug_2015_by_month <- ggplot(data = filter(ok_quakes, year >= 2008),

aes(x = floor_date(date, 'month')), y = ..count..) +

stat_bin(breaks = mbreaks, position = "identity") +

stat_bin(data = filter(ok_quakes, floor_date(date, 'month') == as.Date('2011-11-01')),

breaks = mbreaks, position = 'identity', fill = 'red') +

scale_x_date(breaks = date_breaks("year"), labels = date_format("%Y")) +

scale_y_continuous(expand = c(0, 0)) +

annotate(geom = "text", x = as.Date("2011-11-01"), y = 50, size = rel(4.5), vjust = 0.0, color = "#DD6600", family = dan_base_font(),

label = "November 2011, 10:53 PM\nRecord M5.6 earthquake near Prague, Okla.") +

ggtitle("Oklahoma M3+ earthquakes since 2008, by month")

# plot the chart

ok_1995_to_aug_2015_by_month

Let’s go even more specific:

Focus on November 2011. I’ll also convert the time column to Central Time for these examples:

nov_2011_quakes <- ok_quakes %>%

mutate(time = with_tz(time, 'America/Chicago')) %>%

filter(year == 2011, month(date) == 11)

ggplot(data = nov_2011_quakes, aes(x = floor_date(date, 'day'))) +

geom_histogram(binwidth = 1, color = "white") +

scale_x_date(breaks = date_breaks('week'),

labels = date_format("%b. %d"),

expand = c(0, 0),

limits = c(as.Date("2011-11-01"), as.Date("2011-11-30"))) +

scale_y_continuous(expand = c(0, 0)) +

theme(axis.text.x = element_text(angle = 45, hjust = 0.8)) +

ggtitle("Oklahoma M3+ earthquakes during the month of November 2011")

We see that a majority of earthquakes happened on November 6, the day after the 5.6M quake in Prague on November 5th, 10:53 PM. Let’s filter the dataset for the first week of November 2011 for a closer look at the timeframe:

firstweek_nov_2011_quakes <- nov_2011_quakes %>%

filter(date >= "2011-11-01", date <= "2011-11-07")

# calculate the percentage of first week quakes in November

nrow(firstweek_nov_2011_quakes) / nrow(nov_2011_quakes)

## [1] 0.625

Graph by time and magnitude:

big_q_time <- ymd_hms("2011-11-05 22:53:00", tz = "America/Chicago")

ggplot(data = firstweek_nov_2011_quakes, aes(x = time, y = mag)) +

geom_vline(xintercept = as.numeric(big_q_time), color = "red", linetype = "dashed") +

geom_point() +

scale_x_datetime(breaks = date_breaks('12 hours'), labels = date_format('%b %d\n%H:%M'),

expand = c(0, 0),

limits = c(ymd_hms("2011-11-03 00:00:00"), ymd_hms("2011-11-08 00:00:00"))) +

ylab("Magnitude") +

ggtitle("M3.0+ earthquakes in Oklahoma, first week of November 2011") +

annotate("text", x = big_q_time, y = 5.6, hjust = -0.1, size = 4,

label = "M5.6 earthquake near Prague\n10:53 PM on Nov. 5, 2011", family = dan_base_font()) +

theme(panel.grid.major.x = element_line(linetype = 'dotted', color = 'black'),

axis.title = element_text(color = "black"), axis.title.x = element_blank())

As we saw in the previous chart, things quieted down after November 2011, up until mid-2013. So, again, it’s possible that maybe the general rise of Oklahoma earthquakes is due to a bunch of newer/better seismic sensors since 2013. However, this is only a possiblity if we were doing our analysis in a vaccum divorced from reality. Practically speaking, the USGS likely does not go on a state-specific sensor-installation spree, within a period of two years, in such a way that massively distorts the count of M3.0+ earthquakes. However, in the next section, we’ll step back and see how unusual Oklahoma’s spike in activity is compared to every other U.S. state.

But as a sidenote, the big M5.6 earthquake in November 2011, followed by a period of relative inactivity (at least compared to 2014) does not, on the face of it, support a theory of man-made earthquakes. After all, unless drilling operations just stopped in 2011, we might expect a general uptick in earthquakes through 2012 and 2013. But remember that we’re dealing with a very simplified subset of the earthquake data; in chapter 4, we address this “quiet” period in greater detail.

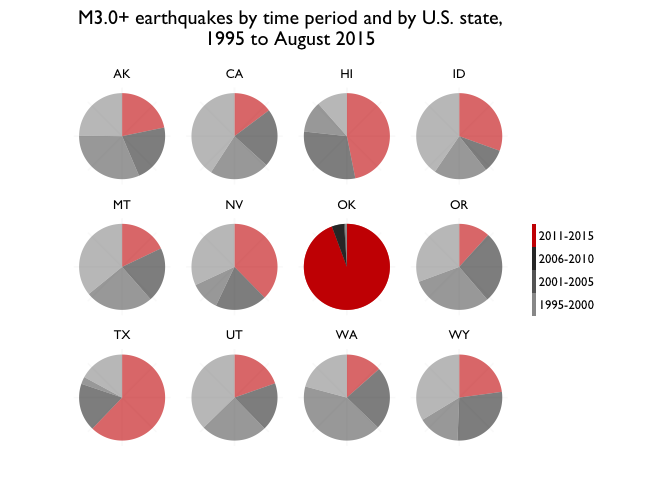

Where does Oklahoma figure among states?

We’ve shown that Oklahoma is most definitely experiencing an unprecedented (at least since 1995) number of earthquakes. So the next logical question to ask is: is the entire world experiencing an uptick in earthquakes?. Because if the answer is “yes”, then that would make it harder to prove that what Oklahoma is experiencing is man-made.

Earthquakes worldwide

First step is to do a histogram by year of the world_quakes data frame. In the figure below, I’ve emphasized years 2011 to 2015:

ggplot(data = world_quakes, aes(x = year, alpha = year >= 2011)) +

geom_histogram(binwidth = 1) +

scale_alpha_discrete(range = c(0.5, 1.0)) +

scale_y_continuous(expand = c(0, 0)) +

guides(alpha = FALSE) +

ggtitle("M3.0+ earthquakes worldwide by year from 1995 to August 2015")

At a glance, it doesn’t appear that the world is facing a post-2011 surge, at least compared compared to what it’s seen in 2005 to 2007. I’ll leave it to you to research those years in seismic history. In any case, I’d argue that trying to quantify a worldwide trend for M3.0+ earthquakes might be futile. To reiterate what the USGS “Earthquake Facts and Statistics” page says, its earthquake records are based on its sensor network, and that network doesn’t provide a uniform level of coverage worldwide:

The USGS estimates that several million earthquakes occur in the world each year. Many go undetected because they hit remote areas or have very small magnitudes. The NEIC now locates about 50 earthquakes each day, or about 20,000 a year. As more and more seismographs are installed in the world, more earthquakes can be and have been located. However, the number of large earthquakes (magnitude 6.0 and greater) has stayed relatively constant.

USGS records for the U.S. compared to the world

Let’s take a detour into a bit of trivia: let’s assume (and this is obviously an incredibly naive assumption when it comes to Earth’s geological composition) that earthquakes occur uniformly across the Earth’s surface. How many earthquakes would we expect to be within the U.S.’s borders?

The U.S. land mass is roughly 9.1 million square km. The world’s surface area is roughly 510,000,000 square km:

9.1 / 510

## [1] 0.01784314

The percentage of U.S.-bounded earthquakes in our dataset can be calculated thusly:

nrow(states_quakes) / nrow(world_quakes)

## [1] 0.05483389

Again, it is basically just wrong to assume that earthquake activity can be averaged across all of Earth’s surface area. But I don’t think it’s far off to assume that the USGS has more comprehensive coverage within the United States.

Let’s look at the histogram of world_quakes, with a breakdown between U.S. and non-U.S. earthquakes:

U.S. vs Non US:

#p_alpha <- ifelse(world_quakes$year >= 2011, 1.0, 0.1)

ggplot(data = world_quakes, aes(x = year, fill = is.na(STUSPS))) +

geom_histogram(binwidth = 1, aes(alpha = year >= 2011)) +

scale_fill_manual(values = c("darkblue", "gray"), labels = c("Within U.S.", "Outside U.S.")) +

scale_alpha_discrete(range = c(0.5, 1.0)) +

scale_y_continuous(expand = c(0, 0)) +

guides(fill = guide_legend(reverse = TRUE), alpha = FALSE) +

ggtitle(expression(

atop("M3.0+ earthquakes worldwide, 1995 to August 2015",

atop("U.S. versus world")),

))

With U.S. making up only 5% of the data, it’s too difficult to visually discern a trend. So let’s just focus on the states_quakes data frame.

U.S. earthquakes only

Let’s make a histogram of U.S. earthquakes only:

ggplot(data = states_quakes, aes(x = year, alpha = year >= 2011)) +

geom_histogram(binwidth = 1, fill = "darkblue") +

scale_alpha_discrete(range = c(0.5, 1.0)) +

scale_y_continuous(expand = c(0, 0)) +

guides(alpha = F) +

ggtitle("M3.0+ U.S. earthquakes by year, 1995 to August 2015")

The year-sized “buckets” may make it difficult to see the trend, so let’s move to a monthly histogram.

In a subsequent example, I want to use geom_smooth() to show a trendline. I know there’s probably a way to do the following aggregation within stat_bin(), but I don’t know it. So I’ll just make a data frame that aggregates the states_quakes data frame by month.

states_quakes_by_month <- states_quakes %>%

mutate(year_month = strftime(date, '%Y-%m')) %>%

group_by(year_month) %>%

summarise(count = n())

# I'm only doing this to add prettier labels to the next chart...I'm sure

# there's a more conventional way to do this if only I better understood

# stat_bin...oh well, I need lapply practice...

c_breaks = lapply(seq(1995, 2015, 2), function(y){ paste(y, "01", sep = '-')})

c_labels = seq(1995, 2015, 2)

The monthly histogram without a trendline (note that I use geom_bar() instead of geom_histogram(), since states_quakes_by_month already has a count aggregation:

ggplot(data = states_quakes_by_month,

aes(x = year_month, y = count, alpha = year_month >= "2011")) +

geom_bar(stat = 'identity', fill = "lightblue") +

scale_alpha_discrete(range = c(0.5, 1.0)) +

scale_y_continuous(expand = c(0, 0)) +

scale_x_discrete(breaks = c_breaks, labels = c_labels) +

guides(alpha = F) +

ggtitle("M3.0+ U.S. earthquakes by month, 1995 to August 2015")

Note: If you’re curious why there’s a spike in 2002, I direct you to the Wikipedia entry for the 2002 Denali earthquake.

Let’s apply a trendline to see the increase more clearly:

ggplot(data = states_quakes_by_month,

aes(x = year_month, y = count, group = 1, alpha = year_month >= "2011")) +

geom_bar(stat = 'identity', fill = "lightblue") +